Let's Talk About Software Engineering Principles

Universal Principles of Software Engineering

No matter the type of software you are building, there are some core principles that are foundational to software engineering. These principles are the building blocks that help you create software that is maintainable, scalable, and reliable. While it is important to become an expert in the framework you are working with, it is even more important to understand and apply these foundational principles, which hold for all software development.

In this article, we will discuss some of the key software engineering principles that every developer should know. These principles are the core pillars of what constitutes good software engineering practices. The ability to identify and apply these principles is what sets a skilled engineer apart from an unskilled one.

DRY (Don't Repeat Yourself)

The DRY principle emphasizes avoiding the duplication of knowledge or information across your codebase. “Every piece of knowledge must have a single, unambiguous, authoritative representation within a system.” — Andy Hunt and Dave Thomas, The Pragmatic Programmer

The primary advantage of minimizing repetition is easier code maintenance and modification. When logic is duplicated across multiple places in your code, a bug fix in one location may be overlooked in others, leading to inconsistent behavior for functions that should act identically. To adhere to DRY, identify repetitive functionality, abstract it into a procedure, class, or other reusable construct, give it a meaningful name, and apply it wherever needed. This approach ensures there is a single point of change, reducing the risk of breaking unrelated functionality and simplifying long-term maintenance.
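Here is a minimal JavaScript sketch of that refactoring. It assumes a hypothetical username rule, and the function names are illustrative. The rule is defined once and given a name, and both features call it. Neither feature keeps its own copy of the pattern.

```javascript
// Hypothetical rule: usernames are 3-20 letters, digits, or underscores.
// Defining it once gives the rule a single, authoritative representation.
const USERNAME_PATTERN = /^[A-Za-z0-9_]{3,20}$/

function isValidUsername(username) {
  return USERNAME_PATTERN.test(username)
}

// Both features reuse the same check instead of copying the pattern.
function registerUser(username) {
  if (!isValidUsername(username)) throw new Error("Invalid username")
  return { username }
}

function renameUser(user, newUsername) {
  if (!isValidUsername(newUsername)) throw new Error("Invalid username")
  return { ...user, username: newUsername }
}
```

If the rule changes, only USERNAME_PATTERN needs to be updated.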

WET (Write Everything Twice)

The WET principle teaches that duplication is sometimes preferable to the wrong abstraction. While DRY encourages eliminating redundancy, WET acknowledges that overzealous abstraction can lead to complex, hard-to-understand code. The WET principle is used to emphasize the importance of clarity and readability over premature abstraction.

KISS (Keep It Simple, Stupid)

The KISS principle states that most systems work best if they are kept simple rather than made complex. Simplicity should be a key goal in design, and unnecessary complexity should be avoided. The KISS principle is about finding the simplest solution to a problem that meets the requirements. It is not about writing the least amount of code possible or avoiding advanced techniques. Instead, it is about finding the right balance between simplicity and complexity.

YAGNI (You Ain't Gonna Need It)

The YAGNI principle states that you should not add functionality until it is needed. This principle is about avoiding speculative programming, where you add features that you think you might need in the future but don't currently need. By following the YAGNI principle, you can avoid unnecessary complexity and keep your codebase clean and maintainable. If you find that you do need the functionality later, you can always add it then.

Abstraction

Abstraction is one of the most fundamental principles in software development. To abstract something means to concentrate on the essential aspects while disregarding unnecessary details. Just like you would not have the insides of home appliances exposed in your living room, abstraction allows you to hide the complexity of your code and focus on the high-level concepts.

In software development, abstraction often works hand in hand with encapsulation, which conceals the implementation details of the abstracted components. Abstraction is evident in many forms. For instance, when defining a type, you abstract away the memory representation of a variable. Similarly, when designing an interface or a function signature, you focus on what matters most: the contract for interaction. In class design, you choose attributes relevant to your domain and specific business needs. Countless other examples exist, but the core purpose of abstraction is to enable interaction without needing to understand the underlying implementation details, allowing you to focus on what truly matters.

This principle extends beyond application development. As a programmer, you rely on your language's syntax to abstract away low-level interactions with the operating system. The OS, in turn, shields your programs from direct interaction with the CPU, memory, NIC, and other hardware. The deeper you delve into the system, the more you realize that everything revolves around layers of abstraction.

Abstraction can take many forms, from data abstraction to hierarchical abstraction. A useful guiding principle for applying abstraction is: "Encapsulate what varies." This means identifying the parts of a system that are likely to change and hiding them behind a stable interface. By doing so, you ensure that even if the internal logic evolves, the way the client interacts with it remains consistent.

For example, imagine you need to perform currency conversions. Initially, you may have only two currencies, so your implementation might look like this:

```javascript
// Illustrative rates; EUR->USD is the approximate inverse of USD->EUR
if (baseCurrency === "USD" && targetCurrency === "EUR") return amount * 0.90
if (baseCurrency === "EUR" && targetCurrency === "USD") return amount * 1.11
```

However, adding more currencies later would require changes directly in the client code, leading to potential maintenance challenges. A better approach is to abstract the conversion logic into a dedicated method, allowing the client to simply call this method whenever needed:

```javascript
function convertCurrency(amount, baseCurrency, targetCurrency) {
  if (baseCurrency === "USD" && targetCurrency === "EUR") return amount * 0.90
  if (baseCurrency === "EUR" && targetCurrency === "USD") return amount * 1.11
  if (baseCurrency === "USD" && targetCurrency === "UAH") return amount * 38.24
  // Add more cases as needed
  // Fail loudly instead of silently returning undefined for unknown pairs
  throw new Error(`Unsupported conversion: ${baseCurrency} -> ${targetCurrency}`)
}
```

This encapsulation makes it easier to manage and extend the logic without altering the client code, maintaining both simplicity and flexibility.
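You can push "encapsulate what varies" one step further by separating the part that changes (the rates) from the part that stays stable (the conversion logic). The sketch below assumes the rates can be kept in a lookup table. The values are illustrative and are not real market data.

```javascript
// Illustrative rates only; in practice these might come from a rate provider.
const RATES = {
  "USD->EUR": 0.90,
  "USD->UAH": 38.24
}

function convertCurrency(amount, baseCurrency, targetCurrency) {
  if (baseCurrency === targetCurrency) return amount
  const rate = RATES[`${baseCurrency}->${targetCurrency}`]
  if (rate === undefined) {
    throw new Error(`Unsupported conversion: ${baseCurrency} -> ${targetCurrency}`)
  }
  return amount * rate
}
```

With this version, adding a currency pair means adding a table entry. The function and its callers stay the same.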

DMMT (Don't Make Me Think)

Code should be easy to read and understand without much thinking. If you need to pause, scratch your head or read the code multiple times to understand what it does, then it is not following the DMMT principle. This principle is about making your code as clear and self-explanatory as possible. You should strive to write code that is easy to read, easy to understand, and easy to maintain.
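Here is a small illustration that uses hypothetical field names. Both functions check the same conditions. The first forces the reader to decode cryptic names and a magic number. The second names each rule, so its intent is clear at a glance.

```javascript
// Hard to read: what are a, b, c, and why 18?
function check(u) {
  return u.a >= 18 && !u.b && u.c.length > 0
}

// Easier to read: each condition has a name that states its intent.
const ADULT_AGE = 18

function canPlaceOrder(user) {
  const isAdult = user.age >= ADULT_AGE
  const hasItemsInCart = user.cart.length > 0
  return isAdult && !user.isBanned && hasItemsInCart
}
```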

TDA (Tell, Don't Ask)

The TDA principle suggests that instead of asking an object about its state and then making decisions on its behalf, you should tell the object what to do and let it decide how, based on its own state.

Look at the following code:

```javascript
class Account {
  constructor (balance) {
    this.balance = balance
  }

  getBalance () {
    return this.balance
  }

  setBalance (amount) {
    this.balance = amount
  }
}

const account = new Account(100)

// The caller asks for the state, makes the decision, then writes the new state.
if (account.getBalance() >= 50) {
  account.setBalance(account.getBalance() - 50)
}
```

This version does not follow “Tell, Don’t Ask”. The calling code queries the account’s balance to decide whether a withdrawal is possible, which exposes the account’s internal state and breaks encapsulation. A better design moves this logic into the Account class itself.

```javascript
class Account {
  constructor (balance) {
    this.balance = balance
  }

  // The account decides for itself whether the withdrawal is allowed.
  withdraw (amount) {
    if (this.balance >= amount) {
      this.balance -= amount
    }
  }
}

const account = new Account(100)
account.withdraw(50)
```

When following the “Tell, Don’t Ask” principle, the Account class’s withdraw method handles the validation logic internally. The account decides for itself whether a withdrawal is permissible. Callers never need to read its balance, and the rule that protects the account's state lives in one place.

Boy Scout Rule

There is a simple rule among Boy Scouts: "Always leave the campground cleaner than you found it." This rule can be applied to software development. Whenever you work on a piece of code, make sure to leave it in a better state than you found it. This could mean refactoring the code, adding comments, improving the documentation, or fixing bugs. By following this simple rule, you can help improve the overall quality of the codebase and make it easier for others to work with.

SINE (Simple Is Not Easy)

It's harder to create a simple solution than a complex one. Simplicity requires work, thought, and effort. As programmers, we tend to focus on intricate details like algorithms and data structures, which can sometimes lead to overly complex or over-engineered solutions. However, the most effective solutions are often the simplest. The real challenge lies in striking the perfect balance between simplicity and complexity.

SOLID Principles

The SOLID principles are a set of five design principles that help you create more maintainable, flexible, and scalable software. They were popularized by Robert C. Martin in the early 2000s and have since become a cornerstone of object-oriented design. The SOLID principles are:

- Single Responsibility Principle (SRP): A class or module should have one responsibility, and therefore only one reason to change. If you need to change how something works, you only have to update one place. This keeps your code easier to read, test, and maintain.

- Open/Closed Principle (OCP): Code should be open for extension but closed for modification. In other words, you should be able to add new features or behavior without rewriting existing functions. For example, instead of modifying a function to handle new cases, you could pass in a configuration object or extend it with a new utility function. This helps keep existing code stable while allowing growth.

- Liskov Substitution Principle (LSP): A subtype must be usable anywhere its base type is expected without breaking the program. More loosely, if you swap one implementation of a contract for another, the code that uses it should keep working. For example, a function that fetches data should behave consistently whether it’s getting data from an API or a local cache. This ensures your code is flexible and predictable.

- Interface Segregation Principle (ISP): Clients should not be forced to depend on methods they do not use. Prefer several small, focused interfaces over one large interface that bundles unrelated operations. For example, a component that only needs to read a user’s data should not depend on an interface that also sends emails, so split them into two interfaces. This makes your code easier to test and reuse.

- Dependency Inversion Principle (DIP): High-level modules should not depend on low-level modules. Both should depend on abstractions. Abstractions should not depend on details. Details should depend on abstractions.
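Here is a minimal JavaScript sketch of dependency inversion, using hypothetical class names. JavaScript has no interface keyword, so the abstraction is an informal contract: any object with a fetchUsers() method that returns an array of users. The data sources are synchronous here for brevity, while a real API client would usually be asynchronous. Both sources honor the same contract, so either one can be substituted (the Liskov idea). A new source can also be added without modifying ReportService (the open/closed idea).

```javascript
// Abstraction (informal contract): an object with fetchUsers() that returns
// an array of { name, active } objects.

// High-level module: depends only on the contract, never on a concrete source.
class ReportService {
  constructor(userSource) {
    this.userSource = userSource // injected, not constructed here
  }

  activeUserNames() {
    return this.userSource
      .fetchUsers()
      .filter(user => user.active)
      .map(user => user.name)
  }
}

// Low-level detail: a hypothetical in-memory cache.
class CachedUserSource {
  constructor(users) {
    this.users = users
  }

  fetchUsers() {
    return this.users
  }
}

// Low-level detail: a stub standing in for an API client (no real network call).
class StubApiUserSource {
  fetchUsers() {
    return [
      { name: "Ada", active: true },
      { name: "Linus", active: false }
    ]
  }
}

// Either source can be plugged in without changing ReportService.
const fromCache = new ReportService(new CachedUserSource([{ name: "Grace", active: true }]))
const fromApi = new ReportService(new StubApiUserSource())
```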

LoD (Law of Demeter)

The Law of Demeter (LoD) is a design guideline that helps you write code that is easier to maintain and understand. The Law of Demeter states that a module should not know about the internal workings of the objects it interacts with. Instead, a module should only interact with its immediate neighbors. This principle is also known as the "principle of least knowledge" or the "don't talk to strangers" rule. According to this principle, you should only interact with an object if one of the following conditions is met:

- The object is the current instance of the class (accessed via this).

- The object is a property of the class.

- The object is passed to a method as a parameter.

- The object is created within the method.

- The object is globally accessible.

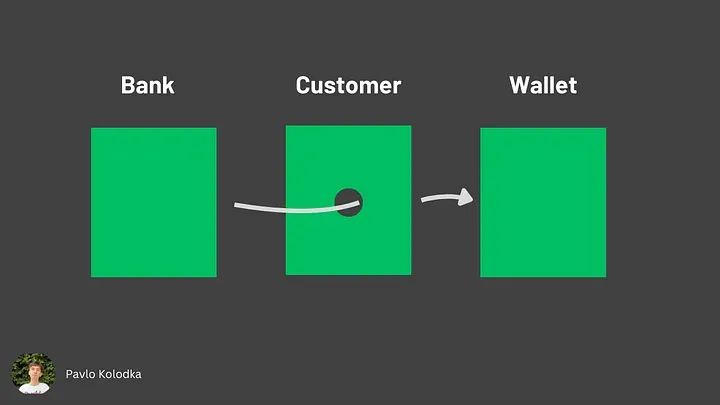

For example, consider a scenario where a customer wants to deposit money into a bank account. This might involve three classes: Wallet, Customer, and Bank. Following the Law of Demeter ensures that each class interacts only with objects it directly relates to, promoting cleaner and more modular code.

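```java
import java.math.BigDecimal;

class Wallet {
    // BigDecimal is used for money to avoid floating-point rounding errors.
    private BigDecimal balance = BigDecimal.ZERO;

    public BigDecimal getBalance() {
        return balance;
    }

    public void addMoney(BigDecimal amount) {
        balance = balance.add(amount);
    }

    public void withdrawMoney(BigDecimal amount) {
        balance = balance.subtract(amount);
    }
}

class Customer {
    public Wallet wallet;

    Customer() {
        wallet = new Wallet();
    }
}

class Bank {
    public void makeDeposit(Customer customer, BigDecimal amount) {
        // Violation: the bank reaches through the customer into the wallet.
        Wallet customerWallet = customer.wallet;
        if (customerWallet.getBalance().compareTo(amount) >= 0) {
            customerWallet.withdrawMoney(amount);
            // ...
        } else {
            // ...
        }
    }
}
```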

The makeDeposit method demonstrates a violation of the Law of Demeter. While accessing the customer's wallet might be acceptable under LoD (even if it seems illogical from a business perspective), the issue arises when the bank object directly calls getBalance and withdrawMoney on the customerWallet object. This results in the bank interacting with a "stranger" (the wallet) instead of a "friend" (the customer).

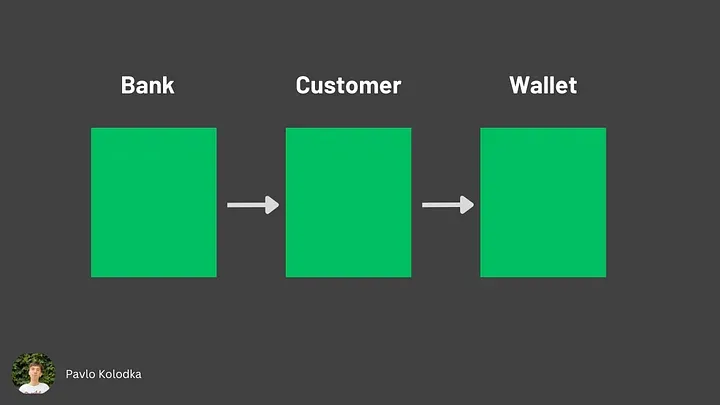

This can be resolved by modifying the Bank class to delegate the deposit operation to the Customer class, which in turn interacts with the Wallet class. This way, each class only interacts with its immediate neighbors, adhering to the Law of Demeter.

```java
import java.math.BigDecimal;

class Wallet {
    private BigDecimal balance = BigDecimal.ZERO;

    public BigDecimal getBalance() {
        return balance;
    }

    public boolean canWithdraw(BigDecimal amount) {
        return balance.compareTo(amount) >= 0;
    }

    public void addMoney(BigDecimal amount) {
        balance = balance.add(amount);
    }

    // Returns true if the withdrawal succeeded, false if funds were insufficient.
    public boolean withdrawMoney(BigDecimal amount) {
        if (!canWithdraw(amount)) {
            return false;
        }
        balance = balance.subtract(amount);
        return true;
    }
}

class Customer {
    private Wallet wallet;

    Customer() {
        wallet = new Wallet();
    }

    // The customer decides how to pay; the bank never sees the wallet.
    public boolean makePayment(BigDecimal amount) {
        return wallet.withdrawMoney(amount);
    }
}

class Bank {
    public void makeDeposit(Customer customer, BigDecimal amount) {
        boolean paymentSuccessful = customer.makePayment(amount);
        if (paymentSuccessful) {
            // ...
        } else {
            // ...
        }
    }
}
```

Now, all interactions with the customer's wallet are routed through the Customer object. This abstraction promotes loose coupling, simplifies changes to the logic within the Wallet and Customer classes (as the Bank object doesn't need to concern itself with the internal structure of the customer), and enhances testability.

As a rule of thumb, a chain such as object.friend.stranger signals a Law of Demeter violation, because you reach through one object to operate on another. Keep calls to object.friend.

SoC (Separation of Concerns)

The Separation of Concerns principle is a design guideline that encourages you to divide your software into distinct sections, each addressing a separate concern. By separating concerns, you can manage complexity, improve maintainability, and facilitate code reuse. The SoC principle is closely related to the Single Responsibility Principle (SRP) of the SOLID principles.

Put simply, SoC advocates dividing a system into smaller, independent parts based on its distinct concerns. A "concern" refers to a specific feature or responsibility within the system.

For example:

- In domain modeling, each object can represent a unique concern.

- In a layered architecture, each layer handles its own responsibilities.

- In microservices, each service addresses a specific purpose.

The essence of SoC can be summarized as follows:

- Identify the system's distinct concerns.

- Split the system into independent components that address these concerns individually.

- Connect these components through well-defined interfaces.

This approach closely aligns with the abstraction principle. Following SoC results in code that is modular, easy to understand, reusable, built on stable interfaces, and easier to test.
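The steps above can be sketched for a hypothetical "create user" request, with all function names illustrative. Parsing, validation, and response formatting are separate concerns, each in its own function. The handler connects them only through plain data.

```javascript
// Concern 1: turning raw input into a structured object.
function parseUserInput(rawJson) {
  const data = JSON.parse(rawJson)
  return { name: String(data.name ?? "").trim(), email: String(data.email ?? "").trim() }
}

// Concern 2: business rules about what a valid user looks like.
function validateUser(user) {
  const errors = []
  if (user.name.length === 0) errors.push("name is required")
  if (!user.email.includes("@")) errors.push("email is invalid")
  return errors
}

// Concern 3: presenting the outcome to the caller.
function formatResponse(user, errors) {
  return errors.length > 0
    ? { status: 400, body: { errors } }
    : { status: 201, body: { name: user.name, email: user.email } }
}

// The handler only wires the concerns together through their interfaces.
function handleCreateUser(rawJson) {
  const user = parseUserInput(rawJson)
  return formatResponse(user, validateUser(user))
}
```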

GRASP

The General Responsibility Assignment Software Patterns (GRASP) is a collection of nine principles introduced by Craig Larman in his book Applying UML and Patterns. These principles guide object-oriented design and are built upon well-established programming practices, much like the SOLID principles.

High Cohesion

Keep related functionalities and responsibilities together.

The principle of high cohesion emphasizes managing complexity by grouping closely related functionalities. In this context, cohesion measures how closely the responsibilities of a class are related.

A class with low cohesion handles tasks unrelated to its primary purpose or tasks that could be delegated to other components. Conversely, a highly cohesive class typically has a small number of methods, all directly related to its core functionality. This design approach improves code maintainability, readability, and reusability.

Low Coupling

Reduce dependencies between unstable components.

This principle focuses on minimizing the dependencies between elements to reduce the risk of side effects from code changes. Coupling refers to how strongly one component relies on another.

Highly coupled elements are tightly dependent on each other, making it difficult to modify one without affecting the other. This increases the complexity of maintenance, reduces code reuse, and hampers readability. In contrast, low coupling promotes independence between components, making them easier to change and less prone to unintended impacts.

Coupling and Cohesion Together

The principles of coupling and cohesion are interrelated. Classes with high cohesion typically have weak connections to other classes, resulting in low coupling. Similarly, when two classes are loosely coupled, they often demonstrate high cohesion by focusing on their specific responsibilities. Adhering to these principles results in a more modular, maintainable, and robust system design.

Information Expert

Assign responsibilities to the object that has the necessary data.

The Information Expert pattern addresses the question of how to assign responsibility for knowledge or tasks. According to this pattern, the object that directly holds the required information is considered the "information expert" and should take on the related responsibility.

For example, in the customer and bank scenario from the Law of Demeter section:

- The Wallet class is the information expert for managing the balance.

- The Customer class is the information expert for its own internal structure and behavior.

- The Bank class is the information expert for operations within the banking domain.

When a responsibility requires information from various parts of the system, intermediate information experts can help gather the data. This ensures objects retain their encapsulation, reducing coupling and enhancing maintainability.
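Here is a minimal sketch using hypothetical Order and OrderLine classes. Each line holds its own price and quantity, so it is the expert for its subtotal. The order holds the lines, so it is the expert for the total and gets that total by delegating to the lines.

```javascript
class OrderLine {
  constructor(unitPrice, quantity) {
    this.unitPrice = unitPrice
    this.quantity = quantity
  }

  // The line has the price and quantity, so it computes its own subtotal.
  subtotal() {
    return this.unitPrice * this.quantity
  }
}

class Order {
  constructor(lines) {
    this.lines = lines
  }

  // The order has the lines, so it computes the total by delegating to them.
  total() {
    return this.lines.reduce((sum, line) => sum + line.subtotal(), 0)
  }
}

const order = new Order([new OrderLine(10, 2), new OrderLine(5, 3)])
```

Callers ask order.total() for the total instead of looping over the order's internal lines themselves.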

Creator

Assign the responsibility of creating an object to a related class.

The Creator pattern answers the question of which class should create a new object instance. According to this principle, the responsibility for creating an object of class X should be given to a class that:

- Aggregates X.

- Contains X.

- Records X.

- Closely uses X.

- Has the necessary data to initialize X.

This approach promotes low coupling by ensuring that the creation responsibility is assigned to a class already related to the new object, avoiding unnecessary dependencies.
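Here is a sketch with a hypothetical order example. An Order contains its line items and has the data needed to initialize them. Creator therefore suggests that the Order, rather than the caller, should construct them.

```javascript
class OrderLine {
  constructor(productId, unitPrice, quantity) {
    this.productId = productId
    this.unitPrice = unitPrice
    this.quantity = quantity
  }
}

class Order {
  constructor() {
    this.lines = []
  }

  // Order contains OrderLine objects, so it is responsible for creating them.
  // Callers never need to construct OrderLine directly.
  addLine(productId, unitPrice, quantity) {
    if (quantity <= 0) throw new Error("quantity must be positive")
    const line = new OrderLine(productId, unitPrice, quantity)
    this.lines.push(line)
    return line
  }
}

const order = new Order()
order.addLine("book-42", 12, 2) // "book-42" is a made-up product id
```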

Controller

Assign the responsibility of handling system events to a specific class.

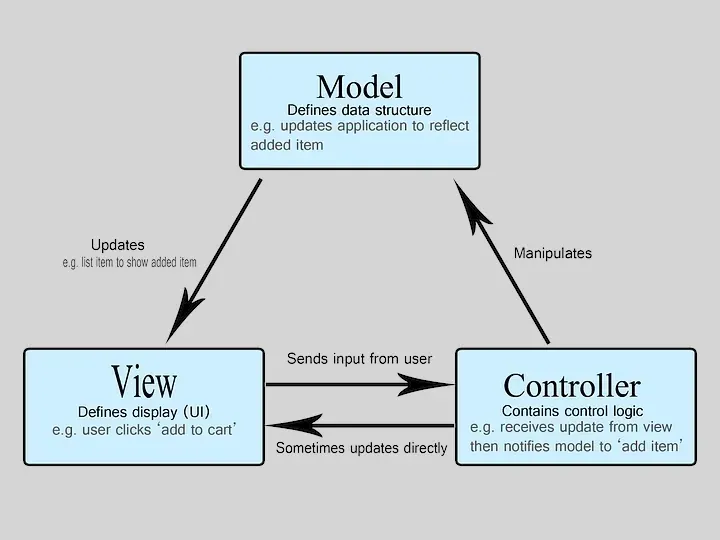

The Controller pattern delegates the responsibility for receiving and directing user interactions or system messages to a designated object. The controller serves as the first point of contact between the UI and the domain layer, routing requests appropriately.

Typically, one controller manages related use cases. For instance, a UserController might handle all interactions involving the User entity. A controller should not contain business logic and should remain as lightweight as possible. Its role is to delegate tasks to the appropriate classes, not to perform them directly. In MVC-like design patterns, the controller acts as an intermediary between the model and the view. By introducing the controller, the model becomes independent of direct interaction with the view, promoting cleaner separation of concerns and more maintainable code.
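Here is a minimal sketch with hypothetical names. UserController and UserService are illustrative, and the plain request object does not follow any particular web framework's API. The controller translates the incoming request and delegates to the service. The business rule lives in the service.

```javascript
// Domain layer: owns the business rules.
class UserService {
  constructor() {
    this.users = new Map()
  }

  register(email) {
    if (this.users.has(email)) throw new Error("email already registered")
    const user = { id: this.users.size + 1, email }
    this.users.set(email, user)
    return user
  }
}

// Controller: first point of contact for the system event. It translates the
// request, delegates to the service, and maps the outcome to a response.
class UserController {
  constructor(userService) {
    this.userService = userService
  }

  handleRegister(request) {
    try {
      const user = this.userService.register(request.body.email)
      return { status: 201, body: user }
    } catch (error) {
      // Simplification for the sketch: every failure is reported as a conflict.
      return { status: 409, body: { error: error.message } }
    }
  }
}

const controller = new UserController(new UserService())
```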

Indirection

To achieve low coupling, delegate responsibilities to an intermediary class.

A well-known aphorism, usually attributed to David Wheeler and popularized by Butler Lampson, says: “All problems in computer science can be solved by another level of indirection.” The principle of indirection follows the same logic as the dependency inversion principle: it introduces an intermediary layer between two components so that their connection becomes indirect. This approach supports low coupling and brings the associated benefits, such as improved flexibility and maintainability.

Polymorphism

When behaviors vary based on type, use polymorphism to delegate responsibility to the specific types.

If you encounter code with extensive if or switch statements that check object types, it likely suffers from a lack of polymorphism. Extending such code means modifying the conditions and adding more checks, which leads to poor design and increased complexity.

Polymorphism addresses this by allowing related classes with different behaviors to share a unified interface. This results in interchangeable components, each responsible for its own functionality. Depending on the programming language, this can be achieved through interfaces, inheritance, or overriding methods. Polymorphism enables pluggable, extendable components without altering unrelated code, promoting a design that aligns with the open-closed principle.

However, it’s essential to apply polymorphism judiciously. Only introduce abstractions when variability in components is expected. Avoid creating unnecessary abstractions for stable components, such as built-in classes or frameworks, as this adds complexity without value.
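Here is a minimal before-and-after sketch using hypothetical shape types. The first function switches on a type tag. In the second version, each class supplies its own area() method, so adding a shape means adding a class rather than editing a switch.

```javascript
// Before: behavior selected by checking a type tag.
function areaOf(shape) {
  switch (shape.kind) {
    case "circle": return Math.PI * shape.radius ** 2
    case "rectangle": return shape.width * shape.height
    default: throw new Error(`Unknown shape: ${shape.kind}`)
  }
}

// After: each type is responsible for its own behavior behind a shared method.
class Circle {
  constructor(radius) {
    this.radius = radius
  }

  area() {
    return Math.PI * this.radius ** 2
  }
}

class Rectangle {
  constructor(width, height) {
    this.width = width
    this.height = height
  }

  area() {
    return this.width * this.height
  }
}

// Client code works with any object that has area(); no type checks needed.
function totalArea(shapes) {
  return shapes.reduce((sum, shape) => sum + shape.area(), 0)
}
```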

Pure Fabrication

To achieve high cohesion, assign responsibilities to a logically convenient class.

Sometimes adhering to high cohesion and low coupling principles requires creating entities that don’t directly exist in the domain. These are called pure fabrications. These entities are designed solely to fulfill specific responsibilities and improve the system's design.

For instance, the Controller pattern is a pure fabrication, as it bridges interactions between different components. DAO (Data Access Object) or repository classes are fabrications that handle data access. While domain classes could handle data access themselves (since they are information experts), this would violate high cohesion by mixing unrelated concerns, such as behavior and data management. It would also increase coupling with the database interface and lead to code duplication, as similar data management logic would be scattered across multiple domain classes.

Pure fabrication allows grouping related behavior into reusable, specialized classes, resulting in better cohesion, reduced dependency, and a clean separation of concerns.
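Here is a minimal sketch that uses an in-memory Map as a stand-in for a real database. UserRepository is a pure fabrication: it has no counterpart in the business domain, and it keeps persistence code out of the User class. Both class names are illustrative.

```javascript
// Domain class: behavior only, no knowledge of storage.
class User {
  constructor(id, name) {
    this.id = id
    this.name = name
  }

  rename(newName) {
    if (!newName) throw new Error("name must not be empty")
    this.name = newName
  }
}

// Pure fabrication: exists only to group data-access responsibilities.
// A Map stands in for a real database in this sketch.
class UserRepository {
  constructor() {
    this.rows = new Map()
  }

  save(user) {
    this.rows.set(user.id, { id: user.id, name: user.name })
  }

  findById(id) {
    const row = this.rows.get(id)
    return row ? new User(row.id, row.name) : null
  }
}

const repo = new UserRepository()
const user = new User(1, "Ada")
user.rename("Ada Lovelace")
repo.save(user)
```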

Protected Variations

Safeguard anticipated changes by defining a stable contract.

The Protected Variations principle focuses on creating systems resilient to change by introducing stable contracts that shield other components from the effects of variation. This principle leverages several design techniques:

- Indirection to switch between implementations.

- Information Expert to delegate responsibilities effectively.

- Polymorphism to create modular, interchangeable components.

Protected Variations is a foundational concept that underpins many design principles and patterns. By anticipating areas of potential change, developers can design systems that are easier to adapt without disrupting unrelated components.

Craig Larman explains the importance of this principle as follows:

At one level, the maturation of a developer or architect can be seen in their growing knowledge of ever-wider mechanisms to achieve PV, to pick the appropriate PV battles worth fighting, and their ability to choose a suitable PV solution. In the early stages, one learns about data encapsulation, interfaces, and polymorphism — all core mechanisms to achieve PV. Later, one learns techniques such as rule-based languages, rule interpreters, reflective and metadata designs, virtual machines, and so forth — all of which can be applied to protect against some variation. — Craig Larman, Applying UML and Patterns

CoC (Convention over Configuration)

The Convention over Configuration (CoC) principle promotes the use of predetermined conventions rather than explicit configuration. By following these conventions, developers need less configuration and get sensible default behavior automatically. This simplifies the development process and improves code readability.

This principle is widely applied in many tools and frameworks, and developers often benefit from it without even realizing it.

For example, the standard layout of a Maven or Gradle Java project, with directories like src/main/java, src/main/resources, and src/test/java, follows the CoC principle. Because test sources live in src/test/java, the build tool compiles and runs them during its test phase without any extra configuration. Similarly, Maven's Surefire plugin by default picks up test classes whose names end in “Test” (among a few other default patterns). Following that naming convention is enough for JUnit tests to be discovered.

Applying the CoC principle also facilitates collaboration among team members as they share a common understanding of conventions and can focus on business logic rather than configuration details.
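In library code, convention over configuration often appears as sensible defaults that callers override only when they need to. Here is a minimal sketch with a hypothetical createServerConfig function. The default values are chosen purely for illustration.

```javascript
// Conventions: values used unless the caller explicitly configures otherwise.
// These defaults are hypothetical, chosen only for illustration.
const DEFAULTS = Object.freeze({
  port: 3000,
  staticDir: "public",
  routesDir: "routes"
})

function createServerConfig(overrides = {}) {
  // Explicit configuration wins; everything else follows the convention.
  return { ...DEFAULTS, ...overrides }
}

// Following the conventions requires no configuration at all...
const conventional = createServerConfig()
// ...while deviating from them is still possible where it matters.
const custom = createServerConfig({ port: 8080 })
```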

Composition over Inheritance

The Composition over Inheritance principle advocates for using class composition instead of inheritance to promote code reusability and avoid rigid dependencies between classes. According to this principle, it’s better to construct complex objects by combining simpler objects rather than creating a complex inheritance hierarchy.

Applying the composition principle brings several advantages. Firstly, it allows greater flexibility in terms of code reuse. Instead of tightly binding a class to an inheritance hierarchy, composition enables the construction of objects by assembling reusable components. It also facilitates code modularity as components can be developed and tested independently before being combined to form more complex objects.

Furthermore, applying composition reduces code complexity and avoids problems with deep and complex inheritance hierarchies. By avoiding excessive inheritance, the code becomes more readable, maintainable, and less prone to errors. Composition also allows focusing on relationships between objects rather than the details of internal implementation in a parent class.

Let’s take a concrete example to illustrate the application of the composition principle. Suppose we are developing a file management system. Instead of creating a complex inheritance hierarchy with classes like “File,” “Folder,” and “Drive,” we can opt for a composition approach where each object has a list of simpler objects, such as “File” objects and “Folder” objects. This allows building flexible file structures and modular manipulation of objects, avoiding the constraints of inheritance.
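Here is a minimal sketch of the file-system example. The classes are named FileItem and FolderItem to avoid clashing with the built-in File global in browsers and recent Node.js versions. Neither class inherits from the other. A FolderItem is composed of children and only relies on each child having a size() method, so nested structures of any depth work naturally.

```javascript
class FileItem {
  constructor(name, sizeInBytes) {
    this.name = name
    this.sizeInBytes = sizeInBytes
  }

  size() {
    return this.sizeInBytes
  }
}

// A folder is composed of children (files or other folders) rather than
// inheriting behavior from a shared base class.
class FolderItem {
  constructor(name, children = []) {
    this.name = name
    this.children = children
  }

  add(child) {
    this.children.push(child)
    return this // allows chaining
  }

  size() {
    return this.children.reduce((total, child) => total + child.size(), 0)
  }
}

const docs = new FolderItem("docs").add(new FileItem("notes.txt", 120)).add(new FileItem("todo.md", 80))
const root = new FolderItem("root", [docs, new FileItem("readme.md", 300)])
```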

By applying the Composition over Inheritance principle, we promote code reusability, modularity, and object flexibility. This leads to clearer, more maintainable, and scalable code while avoiding issues related to complex inheritance hierarchies.

Modularity, Coupling & Cohesion

In the early days of a project, speed is often the only metric that matters. We hack together features, share global variables for convenience, and throw helper functions into a generic utils file. But as the codebase grows, velocity slows. Bugs become harder to track, and a simple change in the billing system somehow breaks the user profile page.

This is the difference between "coding" and Software Engineering.

As noted by industry veteran Dave Farley, software engineering is fundamentally about managing complexity. We cannot hold millions of lines of code in our heads. To build robust, scalable systems, we must break that complexity down into manageable, self-contained units. This is the essence of Modularity.

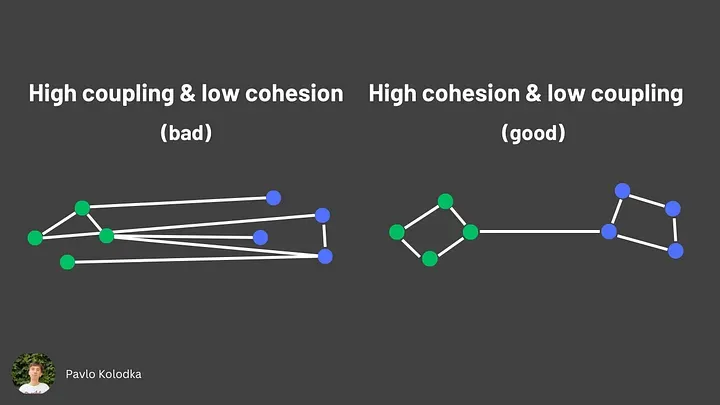

But how do you know if your modules are actually "good"? The answer lies in two complementary metrics: Coupling and Cohesion.

The Golden Rule

The mantra for modular software architecture is simple to say but hard to master:

Aim for Low Coupling and High Cohesion.

If you visualize your code as dots on a graph, you don't want a scattered cloud where everything connects to everything. You want tight, dense clusters (modules) separated by clear white space.

1. Coupling: The Blast Radius

Coupling measures the degree of interdependence between modules. It answers the question: "If I change Module A, how likely is it that Module B breaks?"

In a tightly coupled system, the "blast radius" of a change is massive. You touch a line of code in the inventory service, and the checkout service crashes. Your goal is Low Coupling, where modules interact via strict, simple interfaces without knowing the implementation details of their peers.

The Spectrum of Coupling (From Best to Worst)

Not all dependencies are created equal. Here is how they rank:

- Data Coupling (The Gold Standard): Modules share only the specific data they need.

  - Example: Passing a userId: string to a function. The function knows nothing else about the user entity.

- Stamp Coupling: Modules share a composite data structure, even if they only need part of it.

  - Example: Passing a massive User object (containing preferences, history, and billing info) to a function that only needs the email. If the User structure changes, this function might break unnecessarily.

- Control Coupling: One module passes a flag to control the internal logic of another.

  - Example: processOrder(order, true), where true tells the function to "rush" the order. This is bad because the caller knows too much about the internal logic of the callee. Create specific functions for specific intents. If there is shared logic, extract that into a private helper function.

- Common/Global Coupling: Modules share global data. This is dangerous. A change to the global state affects every module in the system, often unpredictably.

- Content Coupling (The Anti-Pattern): One module directly modifies the internal data or code of another. This effectively means they are the same module, just in different files.
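Here is a minimal sketch of the stamp-coupling and control-coupling examples, using hypothetical functions. The shipping fees are made up. The first pair narrows a parameter from the whole user object to just the email. The second part replaces the boolean rush flag with one function per intent, and those functions share a module-private helper.

```javascript
// Stamp coupling: depends on the whole user object just to read one field.
function welcomeMessageFromUser(user) {
  return `Welcome, ${user.email}!`
}

// Data coupling: depends only on the data it actually needs.
function welcomeMessage(email) {
  return `Welcome, ${email}!`
}

// Control coupling: the caller steers internal logic with a flag.
function processOrderWithFlag(order, rush) {
  return rush ? { ...order, shipping: "express", fee: 15 } : { ...order, shipping: "standard", fee: 0 }
}

// Decoupled: one function per intent, shared logic in a helper that would
// stay private to the module (simply not exported).
function shipOrder(order, shipping, fee) {
  return { ...order, shipping, fee }
}

function processStandardOrder(order) {
  return shipOrder(order, "standard", 0)
}

function processRushOrder(order) {
  return shipOrder(order, "express", 15)
}
```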

2. Cohesion: The Internal Glue

Cohesion measures how closely related the elements inside a single module are. It answers the question: "Do these functions and variables actually belong together?"

In a system with High Cohesion, a module has a single responsibility. If you open a file named OrderValidator, you expect to find code related to validating orders—and nothing else.

The Spectrum of Cohesion (From Best to Worst)

- Functional Cohesion (The Goal): Every element in the module contributes to a single, well-defined task.

  - Example: A TaxCalculator module where every function helps calculate the final tax.

- Sequential Cohesion: The output of one part becomes the input of the next. It’s like a factory assembly line or a Unix pipe.

- Communicational Cohesion: Elements operate on the same data but perform different tasks.

  - Example: A module that reads a record from a database and then prints it.

- Logical Cohesion: Elements are grouped because they do the "same kind of thing," even if they are unrelated.

  - Example: The infamous MathUtils or Helpers folder. It contains a string parser, a date formatter, and a mathematical constant. These things do not belong together; they are just "utilities."

- Coincidental Cohesion: Elements are grouped arbitrarily (e.g., "I put this here because the file size was small"). This is a "dumping ground" and is the worst form of cohesion.
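Here is a sketch of functional cohesion, using a hypothetical TaxCalculator with made-up rates and an exemption threshold. Every member of the module serves the single task of computing tax. An unrelated helper, such as a date formatter, would belong in a different module.

```javascript
// Functionally cohesive module: everything here exists to compute tax.
// Rates and the exemption threshold are hypothetical, for illustration only.
const TaxCalculator = {
  standardRate: 0.2,
  reducedRate: 0.05,
  exemptBelow: 1,

  rateFor(category) {
    return category === "essentials" ? this.reducedRate : this.standardRate
  },

  taxFor(amount, category) {
    if (amount < this.exemptBelow) return 0
    return amount * this.rateFor(category)
  },

  totalWithTax(amount, category) {
    return amount + this.taxFor(amount, category)
  }
}
```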

Adhering to these principles isn't just about academic purity; it has pragmatic benefits for the lifecycle of your software:

- Testability: A highly cohesive module with low coupling can be unit tested in isolation. You don't need to spin up the entire application to test one calculation.

- Maintainability: When code is modular, you know exactly where to look to fix a bug. If the bug is in the "Search" feature, you go to the Search module.

- Reusability: A module that relies on global state is hard to reuse, because that state has to come along with it. A module that only relies on its inputs (Data Coupling) can be moved into another project with little or no change.

Software engineering is an iterative process. You rarely get the boundaries right on day one. However, by constantly evaluating your code against the metrics of Coupling and Cohesion, you stop building "spaghetti code" and start building systems.

The next time you write a function, ask yourself: Does this function know too much about the rest of the system? (Coupling). And then ask: Does this function really belong in this file? (Cohesion). The answers will guide you toward better architecture.